October 7th

Variations of AI in Game Development

Advanced algorithms are changing how games are built and tested. AI is becoming a necessary tool for everything from identifying visual flaws to modeling player behavior. But AI isn't perfect, and human testers remain an essential part of the process. This article looks at specific areas of game development, the kinds of AI models applied in each, and where the field is heading.

Scripting and In-Game Logic Testing – Transformers and GPT Models

In modern game development, creating dynamic and branching dialogues, quest structures, or even basic game logic is crucial. Transformers, such as GPT-based models, have emerged as effective tools for automating these tasks. They assist developers by:

Generating and testing dialogue: LLMs can create contextually relevant dialogue for NPCs, so that conversations flow naturally and respond accurately to player input.

Automating scripting for quest and scenario generation: For games with procedurally generated quests or levels, LLMs can help by writing scripts that ensure variety while following a coherent storyline.

Testing branching narratives: Transformer-based language models can be used to simulate different player decisions and how they affect the game world. Generative LLMs such as GPT can explore various narrative paths, while encoder models such as BERT are better suited to classifying the resulting text, for example to flag logical inconsistencies within complex branching storylines.

Example Application: In an RPG, a Transformer-based AI, such as GPT-4o, can generate and evaluate multiple quest paths and dialogue options for NPCs, testing whether the narrative structure remains coherent across diverse player choices and interactions. Paper: Character Decision Points Detection (CHADPOD).
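The structural half of branching-narrative testing can be sketched without any model at all. The example below is a minimal illustration, not the CHADPOD method and not an LLM: it assumes a hypothetical quest graph stored as a dictionary (`QUEST_GRAPH`, with invented node and choice names) and walks every reachable choice to report nodes that can never be reached and nodes that trap the player without being an ending. An LLM-based tester would sit on top of a check like this, judging whether each path also reads coherently.

```python
from collections import deque

# Hypothetical quest graph: node -> {player choice: next node}.
# All node and choice names are invented for illustration.
QUEST_GRAPH = {
    "start": {"accept quest": "meet_guide", "refuse quest": "village"},
    "meet_guide": {"follow guide": "cave", "go alone": "forest"},
    "village": {"change mind": "meet_guide"},
    "cave": {"fight boss": "ending_victory"},
    "forest": {},                 # no choices and not an ending: a dead end
    "ending_victory": {},
    "secret_shrine": {"pray": "ending_victory"},  # nothing links here
}
ENDINGS = {"ending_victory"}


def audit_narrative(graph, start, endings):
    """Breadth-first walk over every choice; returns (unreachable, dead_ends)."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for next_node in graph[node].values():
            if next_node not in seen:
                seen.add(next_node)
                queue.append(next_node)
    unreachable = sorted(set(graph) - seen)
    dead_ends = sorted(n for n in seen if not graph[n] and n not in endings)
    return unreachable, dead_ends


unreachable, dead_ends = audit_narrative(QUEST_GRAPH, "start", ENDINGS)
print("unreachable:", unreachable)
print("dead ends:", dead_ends)
```

Here the walk reports `secret_shrine` as unreachable and `forest` as a dead end. The same traversal can also enumerate complete paths to hand to a language model for a coherence review.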

Texture and Image Production Using Generative Adversarial Networks

In procedural content creation, Generative Adversarial Networks (GANs) are frequently used, especially for creating textures, landscapes, and other visual components. Common model types are image-to-texture synthesis and text-to-texture generation. Their main applications include:

Procedural texture generation: GANs generate high-quality textures for environments, characters, and objects, reducing the workload for human artists while ensuring variety across levels.

Dynamic landscape creation: GANs are employed to generate vast landscapes with diverse topographies, allowing for dynamic environments that remain visually coherent.

Character and object design: GANs help in automatically generating unique NPCs or props based on training data, ensuring a consistent aesthetic while maintaining randomness in large, open-world games.

Example Application: A GAN could generate unique textures for different types of terrain in a fantasy open-world game, ensuring that no two areas look identical while adhering to the overall visual theme. A good example of texture generation from text and images is the FlexiTex model.

AI Opponents and Gameplay Mechanics – Reinforcement Learning

AI opponents and gameplay mechanics can be tested and tuned with Reinforcement Learning (RL) models. RL is computationally expensive even for simple games: training takes a huge number of iterations, and each iteration is a running instance of the game. Because these models learn from interaction with the game world, they are well suited to:

AI opponent training and balancing: RL models simulate player behavior and fine-tune AI opponents’ difficulty, ensuring that they challenge human players without being too overpowering or predictable.

Testing gameplay mechanics: RL agents can experiment with game mechanics, like platforming, physics-based puzzles, or resource management systems, identifying potential exploits or areas of imbalance.

Level progression optimization: RL models analyze how players navigate through levels, providing insight into whether the difficulty curve is appropriate.

Example Application: In a strategy game, an RL agent can simulate an AI opponent that adjusts its tactics based on player actions, ensuring a competitive yet fair experience. Check out this video from OpenAI on creating an RL-based MultiAgent.
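To make the "huge number of iterations" concrete, here is a minimal sketch of tabular Q-learning, one of the simplest RL algorithms, on a toy game. Everything in it is an assumption made for illustration: the game is a five-cell corridor with the goal at the right end, and the learning rate, discount, and exploration rate are arbitrary choices. A real game replaces `step` with a full game tick, which is where the compute cost comes from.

```python
import random

N_STATES = 5          # corridor cells 0..4; the goal is cell 4
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2


def step(state, action_index):
    """Toy game: move along the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q_row, rng):
    """Epsilon-greedy choice; ties are broken randomly so early play explores."""
    if rng.random() < EPSILON or q_row[0] == q_row[1]:
        return rng.randrange(2)
    return 0 if q_row[0] > q_row[1] else 1


def train(episodes=500, max_steps=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):          # each step is one tick of the game
            action = choose_action(q[state], rng)
            next_state, reward, done = step(state, action)
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy policy for the non-goal cells: 1 means "step right".
policy = [0 if row[0] > row[1] else 1 for row in q_table[:-1]]
print(policy)
```

The learned policy steps right in every cell, which is the shortest route to the goal. Even this trivial game runs hundreds of episodes to get there, which hints at the cost for a game with a large state space.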

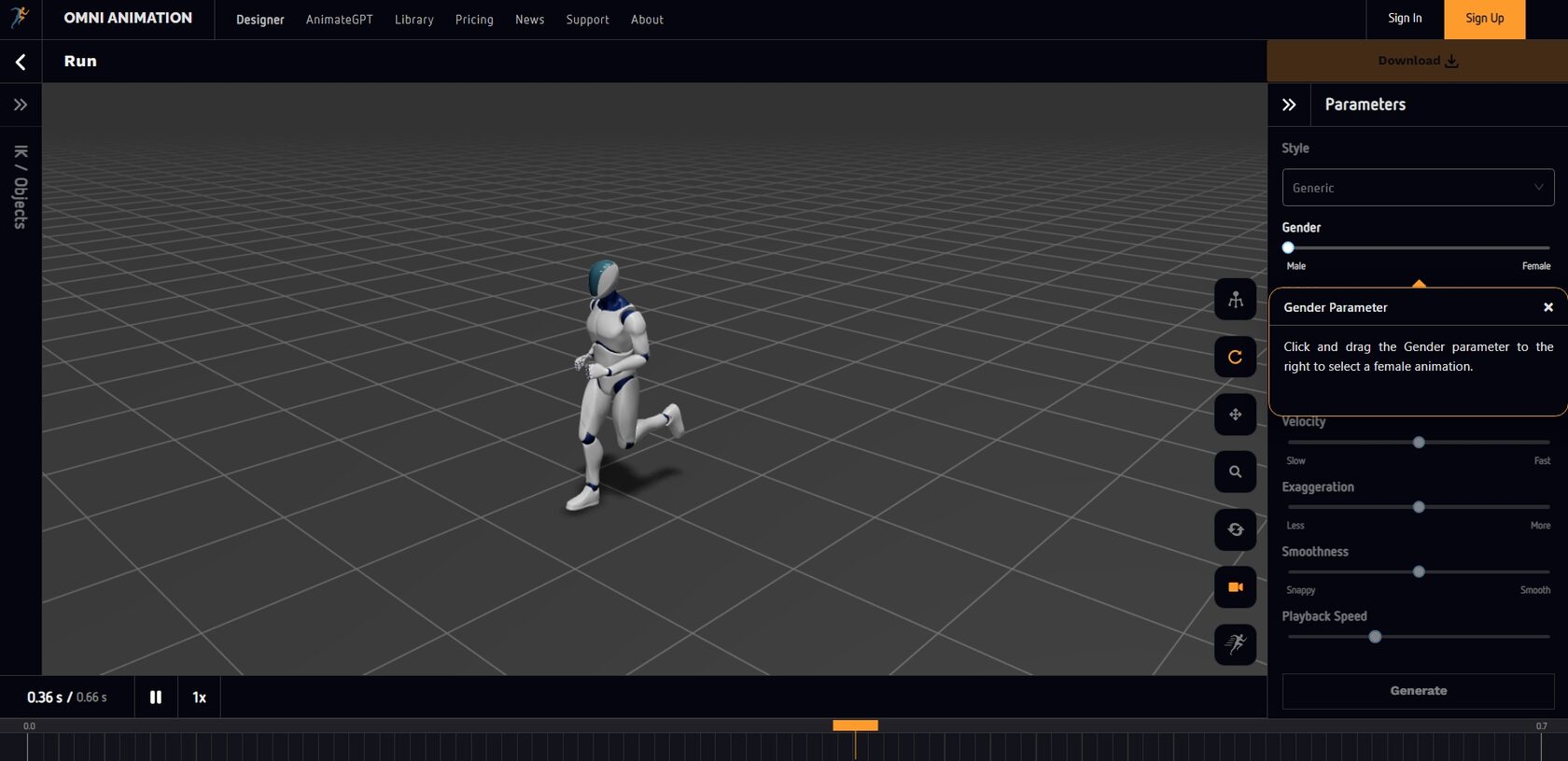

Animation and NPC Behavior – Decision Trees and Transformers

For in-game character behaviors, particularly in terms of NPC actions and animations, decision trees and Transformers are heavily used. These models can handle:

NPC pathfinding and behavior modeling: Decision trees control basic NPC behaviors, such as movement, interaction, and response to player actions. Transformers handle more complex decision-making, like how NPCs should react emotionally to players' actions.

Testing character animations: Transformers are capable of assessing motion-capture data and helping to adjust animations so that they seem natural and smooth.

Procedural animation: Without pre-programmed sequences, AI systems create animations in real time in response to user input, enabling more dynamic and responsive gameplay.

Example Application: In a stealth game, AI-driven NPCs use decision trees for movement and patrolling, while Transformers generate more nuanced reactions based on player behavior, like spotting the player or hearing suspicious sounds. A company called Omni Animation offers AnimateGPT, a model that works in conjunction with an LLM to create animations. That said, it is still too early to talk about generating animations inside games.
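The decision-tree half of that stealth example is easy to sketch. The code below is a hand-written tree, not a trained model, and every name in it (`GUARD_TREE`, the perception keys, the action strings, the 5.0 distance threshold) is hypothetical. Each inner node asks one yes/no question about what the guard perceives, and each leaf names an action.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Decision:
    """Inner node: ask a yes/no question about the guard's perception."""
    question: Callable[[dict], bool]
    if_yes: Union["Decision", str]
    if_no: Union["Decision", str]


def decide(node, perception):
    """Walk from the root until a leaf (an action name) is reached."""
    while isinstance(node, Decision):
        node = node.if_yes if node.question(perception) else node.if_no
    return node


# Hypothetical guard behavior for a stealth game; keys and actions are invented.
GUARD_TREE = Decision(
    question=lambda p: p["sees_player"],
    if_yes=Decision(
        question=lambda p: p["distance"] < 5.0,
        if_yes="attack",
        if_no="chase",
    ),
    if_no=Decision(
        question=lambda p: p["heard_noise"],
        if_yes="investigate",
        if_no="patrol",
    ),
)

print(decide(GUARD_TREE, {"sees_player": False, "heard_noise": True, "distance": 20.0}))
```

A guard that hears a noise but sees nothing ends up at `investigate`. Because the tree is plain data, a test harness can enumerate every combination of inputs and confirm that each one reaches a sensible action.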

Screenshot and video by Opsive

Audio and Music Testing – Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are especially effective for time-series data, which makes them advantageous in audio and music generation and testing.

In game development, they manage:

Dynamic music generation: RNNs create music that adapts in real-time based on in-game events or player actions, such as increasing tension during combat or creating a calm atmosphere in peaceful areas.

Audio asset testing: RNNs can test whether audio cues are synchronized with visual events, such as sound effects matching explosions or footsteps.

Procedural sound generation: RNNs generate sound effects on the fly, creating variations that enhance immersion without repetitive audio loops.

Example Application: In a horror game, an RNN can be used to dynamically generate eerie soundscapes that change depending on where the player is in relation to specific events or objects, building tension.
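Whatever model extracts the events, the synchronization check from the list above comes down to comparing timestamps. This is a minimal sketch with no RNN involved: it assumes two hypothetical event logs (seconds since the start of a recording) and an assumed tolerance of 50 ms, and it reports visual events that have no matching audio cue close enough in time.

```python
TOLERANCE_S = 0.05  # assumed acceptable audio/visual offset, in seconds

# Hypothetical event logs: (event name, timestamp in seconds).
visual_events = [("explosion", 1.20), ("footstep", 2.00), ("footstep", 2.50)]
audio_events = [("explosion", 1.22), ("footstep", 2.01), ("footstep", 2.75)]


def find_desynced(visual, audio, tolerance=TOLERANCE_S):
    """Return visual events with no same-named audio cue within the tolerance."""
    problems = []
    unused = list(audio)
    for name, shown_at in visual:
        candidates = [a for a in unused if a[0] == name]
        best = min(candidates, key=lambda a: abs(a[1] - shown_at), default=None)
        if best is not None and abs(best[1] - shown_at) <= tolerance:
            unused.remove(best)  # each audio cue may match only one visual event
        else:
            problems.append((name, shown_at))
    return problems


print(find_desynced(visual_events, audio_events))
```

With these sample logs, only the second footstep is flagged, because its sound arrives a quarter of a second late.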

Convolutional Neural Networks

CNNs can be used to analyze what is happening on the screen, for example to track the player's movement and to detect visual faults. At Pyxidis, for instance, we analyze the player's emotions and the on-screen action using CNNs and multimodal models.

CNNs are excellent at detecting major graphical anomalies such as artifacts or visual glitches in live or recorded games. The main difficulty is training such models, because framerate issues and game art styles vary enormously.

UI/UX testing evaluates the user interface by identifying inconsistencies such as misplaced buttons or overlapping text. Many startups offer tools for evaluating how user-friendly a UI design is, but comparable tools for games do not exist yet.

Object collision and clipping detection: CNNs monitor game scenes for issues where objects improperly intersect or characters move through walls.

Example Application: During testing of a 3D adventure game, a CNN could scan frames for any texture pop-in or graphical glitches, ensuring that visual fidelity remains consistent across various hardware setups.
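The building block behind all of this is the convolution: a small kernel slides over the frame and responds where the local pixel pattern matches. The sketch below shows that single operation in plain Python. It is not a trained network: a real CNN learns many kernels from labeled frames, while the Laplacian kernel, the 5x5 sample frame, and the threshold of 500 here are hand-picked assumptions.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# Hand-written Laplacian kernel: responds strongly to isolated bright or dark pixels.
LAPLACIAN = [[0, -1, 0],
             [-1, 4, -1],
             [0, -1, 0]]

# Hypothetical 5x5 grayscale frame: flat gray with one "hot" pixel in the middle.
frame = [[10] * 5 for _ in range(5)]
frame[2][2] = 255

response = convolve2d(frame, LAPLACIAN)
peak = max(abs(v) for row in response for v in row)
print("glitch suspected" if peak > 500 else "frame looks clean")
```

A flat frame produces an all-zero response, while the single hot pixel produces a sharp peak and trips the threshold. Learned kernels generalize this idea to textures, seams, and other artifact patterns.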

Screenshot from Pyxidis Platform with emotion analysis

Performance and Network Optimization – Deep Q-Networks (DQN) and Reinforcement Learning

To ensure network performance and optimization in multiplayer or resource-heavy games, Deep Q-Networks (DQN) and RL models are employed. These models specialize in:

Latency and network testing: RL models help simulate real-world network conditions, identifying potential lag, packet loss, or server issues in multiplayer games.

Performance optimization: DQN models can adjust resource allocation dynamically, ensuring smooth gameplay even under high demand, such as in large-scale online battles.

Server load testing: AI can simulate thousands of players interacting with game servers to test how well they hold up under peak conditions.

Example Application: In a battle royale game, DQN models could be used to test how server performance degrades as more players join a match, helping developers optimize for better scalability.
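The load-testing idea can be tried on a laptop before any model is involved. The sketch below uses scripted bots rather than learned ones, and the server is a stand-in: `fake_server`, its capacity of 50 concurrent requests, and its 1 to 3 ms work time are all assumptions. It shows the shape of the measurement, which is to launch many simulated players at once and watch tail latency as the player count grows.

```python
import asyncio
import random
import time

SERVER_CAPACITY = 50  # assumed number of requests the fake server handles at once


async def fake_server(slots, rng):
    """Stand-in for a real game server: limited slots, small random work time."""
    async with slots:
        await asyncio.sleep(rng.uniform(0.001, 0.003))


async def simulated_player(slots, rng, latencies):
    """One bot sends a single request and records how long it waited."""
    started = time.perf_counter()
    await fake_server(slots, rng)
    latencies.append(time.perf_counter() - started)


async def run_load_test(n_players, seed=0):
    rng = random.Random(seed)
    slots = asyncio.Semaphore(SERVER_CAPACITY)
    latencies = []
    await asyncio.gather(
        *(simulated_player(slots, rng, latencies) for _ in range(n_players))
    )
    return sorted(latencies)


for n in (100, 1000):
    result = asyncio.run(run_load_test(n))
    p95 = result[int(0.95 * (len(result) - 1))]
    print(f"{n} players: p95 latency {p95 * 1000:.1f} ms")
```

Exact timings depend on the machine, so no numbers are quoted here. An RL or DQN agent would take the place of the fixed loop over player counts, choosing which load patterns to try next based on the latencies it observes.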

Future of AI in Game Testing

As AI research progresses, several key areas will drive the next wave of innovation in game testing:

- Unsupervised Learning

- Multi-Modal AI

- Self-Supervised Learning

- Neurosymbolic AI

At Pyxidis, we're dedicated to incorporating the most recent advancements in AI-driven game testing, guaranteeing a harmonious combination of automation and human judgment for optimal game quality. Keep checking back as we continue to push the limits of artificial intelligence in game development.